The answer turned out to be the latter, but along the way I noticed a curious oversight in the iOS security model.

iOS works hand-in-hand with iTunes to back up the contents of an iPhone / iPad / iPod. By connecting an iOS device to the USB port of a PC or Mac running iTunes, you can make a (nearly) complete backup of the files stored on that device. I say nearly because Apple wisely excludes passwords and private health-related data from the backup unless the archive is encrypted.

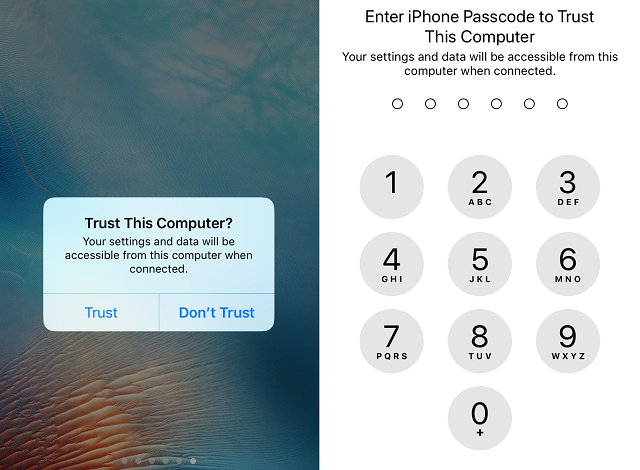

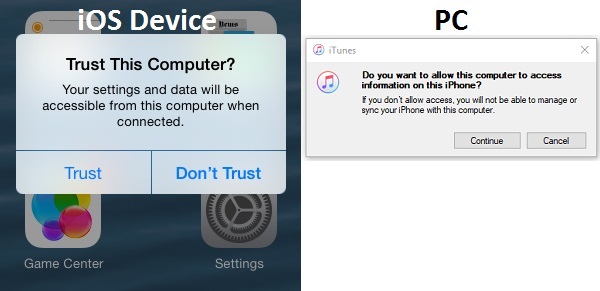

When you connect an iOS device to a computer it has never synced with before, both the device and iTunes ask you to confirm that you do in fact want each to trust the other.

That's a great idea: "trust" implies a lot. A trusted computer is allowed to copy information to and from the iOS device - uploading pictures, downloading music, installing and backing up apps, syncing contacts.

What is missing from this picture though?

Getting the iOS device to trust a new computer was a one-click operation - at no point was I asked to provide credentials to approve the trust relationship. As long as the device was unlocked, one click was enough to tell the iPhone or iPad that this computer could be trusted.

While this is a relatively minor risk, I can think of a fairly common scenario in which this model could be abused.

Have you ever lent your phone to a friend so they could make a brief phone call?

A decade or so ago, a cell phone did little more than make phone calls. If I borrowed a friend's phone, I might be able to make an unexpected toll call, or perhaps swipe a private phone number from their contacts, but that was about it. Modern smartphones, though, are treasure troves of private information - photos, email, SMS history, browser history, cached login information, apps with direct access to financial accounts - the list goes on.

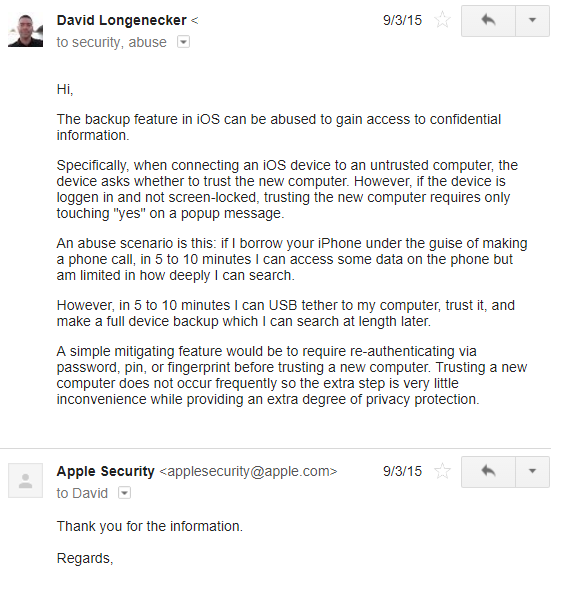

If I borrow your iPhone under the guise of making a phone call, in 5 to 10 minutes I can access some of the data on the phone, but I am limited in how deeply I can search.

However, in those same 5 to 10 minutes I can connect the phone to my computer over USB, trust it, and make a full device backup that I can search at my leisure later. Or, in just a few seconds, I can establish the trust relationship now, and later slip the phone off your desk to back it up even while it is locked.

I wrote at the time that a simple mitigation would be to require re-authentication via password, PIN, or fingerprint before trusting a new computer. Trusting a new computer happens rarely - with any given device, you will likely do it only once or twice over its lifetime - so the extra step adds very little inconvenience while providing an extra degree of privacy protection.

I also provided that input to Apple (typo and all), and got an incredibly thorough reply:

Ah, but now, two years later, it seems my suggestion has come to fruition: iOS 11 behaves exactly as I suggested it should.

Connecting an iPhone or iPad to an untrusted PC still displays the "Trust / Don't Trust" dialog, but it is now followed by a prompt to enter your PIN before the trust operation completes.