The growth of the Internet from a novel idea into a business necessity created a new market for online service providers. Large corporations have the resources to run their own web servers and to hire professional staff to keep them running well and (hopefully) secure. When you run a small business though - and in particular, a business that is not in a computer technology field - more often than not you are dependent on third parties to provide such services. If your company is in the business of collecting and disposing of garbage, you might expect to invest heavily in trucks and landfill property. A company web site through which to offer online bill payment may not be at the top of your in-house priority list.

There's absolutely nothing wrong with that. Why try to be something you are not? Doing what you do, well, and paying someone else to do the rest can be an effective business model. Alas, outsourcing isn't (or at least shouldn't be) a "choose someone and forget about it" decision.

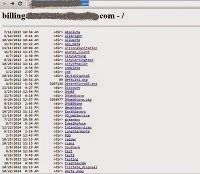

In December 2014, I was updating some passwords and happened upon an outdated link in my password manager. Lastpass remembers not only the username and password for various websites, but also the exact link to the last-known login page. In this case, my trash service had moved to a new company for billing, but Lastpass still pointed to the previous location. The same principle would apply if I had saved a bookmark to the billing page. Ordinarily that wouldn't be a problem - either the business would set up a redirect to the new page, or an invoice or bill might state the new URL. In this case, I followed the link to my trash service, and to my surprise saw this:

That can't be good.

As an application hosting service, you almost never want to enable "directory browsing" (also called directory listing), which lets an Internet guest see your raw files and directories. You want to control what the end user sees - a web page or a login screen, something that gives the customer confidence in your web application. You also want to guide them through your online content, rather than leave them to hunt through a long list of confusing options.
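For a provider who wants to check its own sites, auto-generated listings are easy to spot because they carry recognizable boilerplate. Here is a minimal sketch in Python, assuming the page body has already been fetched. The marker strings are my assumption, based on the typical IIS ("[To Parent Directory]") and Apache/nginx ("Index of /") listing pages, so treat a negative result as "probably fine," not proof.

```python
import re

# Assumed markers: boilerplate that IIS, Apache and nginx typically emit
# on auto-generated directory listings. Not an exhaustive list.
LISTING_MARKERS = [
    re.compile(r"\[To Parent Directory\]", re.IGNORECASE),  # IIS
    re.compile(r"<title>\s*Index of /", re.IGNORECASE),     # Apache, nginx
]


def looks_like_directory_listing(html: str) -> bool:
    """Return True if the page body resembles an auto-generated file index."""
    return any(marker.search(html) for marker in LISTING_MARKERS)


if __name__ == "__main__":
    iis_listing = '<pre><A HREF="/">[To Parent Directory]</A><br><br>...</pre>'
    login_page = "<html><title>Customer Login</title><form>...</form></html>"
    print(looks_like_directory_listing(iis_listing))  # True
    print(looks_like_directory_listing(login_page))   # False
```

On IIS the actual fix is a configuration change rather than code: directory browsing is controlled by the `directoryBrowse` element (for example, `<directoryBrowse enabled="false" />` under `system.webServer` in web.config).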

As a seasoned IT administrator and security practitioner, I read this screen differently. It tells me that the company missed a pretty basic configuration step, and thus may well have made other security mistakes. At a glance I have a good idea who else uses this hosting provider. More damaging, I see a few files that are of great interest to me.

DMWebEcova.zip catches my attention. I don't know what it is, but a 3-megabyte zip archive has the potential to contain valuable information. The same is true of DesertMicroQS.exe. The App_Data folder is a common catch-all location for application data on a Microsoft Windows server and might contain any number of things ... perhaps Outlook .OST and .PST files, the email archives that proved so embarrassing to certain individuals at Sony. Scrolling down, I see web.config and global.inc, configuration files common to Microsoft web applications (Classic ASP and ASP.NET). The latter file in particular often holds login credentials for databases.
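A hosting provider could run the same triage against its own web root before an outsider does. The sketch below walks a directory tree and flags file types like the ones above that rarely belong on a public site. The extension list is a hypothetical policy drawn from this example, not a standard, and some entries (web.config, for instance, which IIS normally refuses to serve) call for review rather than automatic deletion.

```python
import os
import tempfile
from pathlib import Path

# Hypothetical, non-exhaustive list of extensions drawn from the files above.
SENSITIVE_SUFFIXES = {
    ".zip", ".exe",          # archives and installers
    ".config", ".inc",       # configuration and include files
    ".pst", ".ost",          # Outlook mail stores
    ".pem", ".key", ".pfx",  # certificates and private keys
    ".mdb",                  # Microsoft Access databases
}


def find_sensitive_files(web_root: str) -> list[str]:
    """Return sorted paths under web_root whose extension suggests review is needed."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(web_root):
        for name in filenames:
            if Path(name).suffix.lower() in SENSITIVE_SUFFIXES:
                hits.append(os.path.join(dirpath, name))
    return sorted(hits)


if __name__ == "__main__":
    # Build a throwaway "web root" to show the scan in action.
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "App_Data"))
        for rel in ("index.html", "DMWebEcova.zip", os.path.join("App_Data", "mail.PST")):
            Path(root, rel).touch()
        for path in find_sensitive_files(root):
            print(os.path.relpath(path, root))
```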

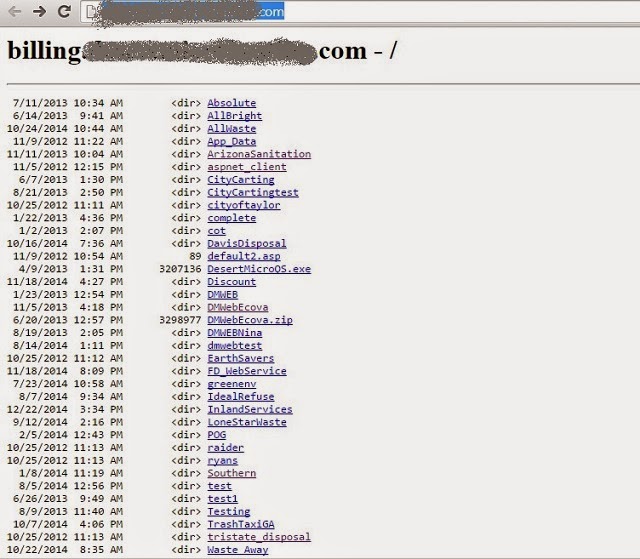

Following the links into the folders, most open into client websites or demo sites - things one would expect to be publicly available. But one folder contains this:

As you might imagine, these contain passwords - passwords that could have something to do with administering the web services. The last file is a .pem, a file format commonly used to store encryption certificates and their private keys - not the sort of thing you want lying around on a public web site. The private key is the piece you want to keep secret, so that only you can read messages or secured web traffic sent to you. If someone else obtains it, they may be able to read secured web traffic intended for you, or impersonate your site.
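Not every .pem file is dangerous: a certificate on its own is meant to be public, and it is the private key that must stay secret. PEM files label each block with a "-----BEGIN ...-----" line, so a quick check can tell which is which. A minimal sketch, assuming the file is ordinary PEM text; it only reads the labels and does not parse or validate the key material itself.

```python
import re

# Captures the label from PEM armor lines such as "-----BEGIN CERTIFICATE-----".
PEM_LABEL = re.compile(r"-----BEGIN ([A-Z0-9 ]+)-----")


def pem_labels(text: str) -> list[str]:
    """Return the label of every PEM block in the text, in order."""
    return PEM_LABEL.findall(text)


def contains_private_key(text: str) -> bool:
    """True if any block is a private key (PKCS#8, RSA, EC, encrypted, ...)."""
    return any(label.endswith("PRIVATE KEY") for label in pem_labels(text))


if __name__ == "__main__":
    bundle = (
        "-----BEGIN CERTIFICATE-----\nMIIB...\n-----END CERTIFICATE-----\n"
        "-----BEGIN RSA PRIVATE KEY-----\nMIIE...\n-----END RSA PRIVATE KEY-----\n"
    )
    print(pem_labels(bundle))            # ['CERTIFICATE', 'RSA PRIVATE KEY']
    print(contains_private_key(bundle))  # True
```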

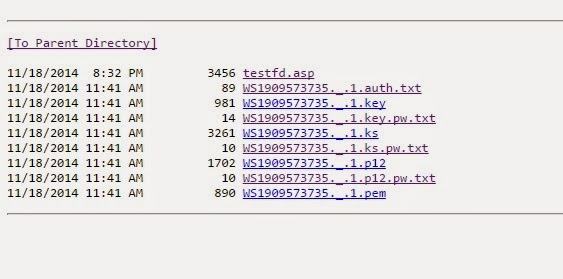

Following another link reveals the following error message - yet another web application no-no:

The web server is configured to give detailed error messages, rather than a simple "file not found" message. To a penetration tester (or malicious hacker), error messages such as this are a gold mine: they reveal the type and version of the web server (Microsoft Internet Information Services version 7.0, also known as IIS 7), from which one can look up well-documented security flaws to exploit. Further, this message reveals the physical file path - `c:\inetpub\wwwroot\billing\` - which might be useful in crafting the next step in a potential web attack.
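Checking for this kind of leak is straightforward: request a page that doesn't exist and look at what comes back. The sketch below scans a response body for two of the details mentioned above, an IIS version string and a Windows file path. The regular expressions are illustrative assumptions and will miss other giveaways (stack traces, framework versions), so an empty result means "nothing obvious," not "safe."

```python
import re

# Illustrative patterns for details a production error page should not reveal.
WINDOWS_PATH = re.compile(r"\b[A-Za-z]:\\[^\s<>\"']*")
IIS_VERSION = re.compile(r"(?:Microsoft-IIS/|IIS )(\d+(?:\.\d+)?)")


def disclosed_details(body: str) -> dict[str, list[str]]:
    """Collect server versions and physical paths leaked in an error response."""
    return {
        "server_versions": IIS_VERSION.findall(body),
        "physical_paths": WINDOWS_PATH.findall(body),
    }


if __name__ == "__main__":
    # Hypothetical response body, loosely modeled on an IIS 7 detailed error page.
    page = (r"<title>IIS 7.0 Detailed Error - 404.0 - Not Found</title>"
            r"<th>Physical Path</th><td>c:\inetpub\wwwroot\billing\missing.aspx</td>")
    print(disclosed_details(page))
```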

Going further down this path, though, is unnecessary (and would be unwise). Without permission from the hosting service, probing for and exploiting software vulnerabilities can run afoul of computer abuse laws. However, viewing files placed publicly on a web server - even if they should not have been - does not (today).

As I said earlier, the file DMWebEcova.zip caught my attention. Opening it up reveals several files of great interest:

Included in this zip file are more encryption certificates, (poorly chosen) database passwords, server administration passwords, and a Microsoft Access 97 database. Which contains customer records. With some payment card numbers. Some of which have date and time stamps suggesting they were from 2004 and 2005. The trash service's customers had their information put at risk by poor security practices at a web hosting service the company had stopped using a decade earlier. Wow.

Perusing files left on a public web server is within current US law, but credit and debit cards are not something I want to mess around with, so at this point I deleted everything from my local cache and looked for someone to contact.

I was ultimately able to reach the affected company and report the exposed information, and the website configuration problem that exposed it in the first place has been corrected. That was in fact part of the reason I looked into the zip file: much like you might open a lost purse or wallet to find a name and address to return it to, I was looking for something that identified the business whose information was exposed so I could let them know. The company no longer did business under that name, but some phone numbers and addresses ultimately led me to the right place.

This event is nearly identical to one reported by SC Magazine in February. SC Magazine reported that Texas-based Lone Star Circle of Care had created a backup of the entire website, and inadvertently placed that backup copy on the website where it could be downloaded. In that case, names, addresses, phone numbers, birthdates, and social security numbers for some 8,700 individuals were exposed and accessed "numerous times by unauthorized individuals."

I find several lessons here.

For the small business owner, consider the "exit strategy" when establishing a relationship with a new service provider: how should that relationship end, and specifically, what will happen to any proprietary company information at that time? Ensure your contracts clearly spell out how any information will be stored, returned, or destroyed. Different forms of information may be subject to different retention requirements - but there is no excuse for a service provider to still hold the credit card numbers of customers of a business that stopped using that provider a decade ago.
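A provider that wants to honor such a contract could start by periodically scanning retained files for anything resembling a payment card number. A common approach pairs a digit-pattern match with the Luhn checksum that card numbers carry, which filters out most random strings of digits. This is a simplified sketch, assuming plain-text input: it only recognizes 13 to 19 digits optionally separated by single spaces or hyphens, it can be confused by digits that run directly into other numbers, and binary formats such as an Access database would need to be exported to text first.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        digit = int(ch)
        if i % 2 == 1:      # double every second digit, counting from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return Luhn-valid, card-like numbers found in text, separators removed."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a well-known test number, not real card data.
    record = "Customer 1042, card 4111 1111 1111 1111, order 1234567812345678"
    print(find_card_numbers(record))  # ['4111111111111111']
```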

For the consumer, the biggest lesson is that you really don't have any control over how your information is used (or misused) once you entrust it to a business. That your information could be taken from a company you have not used in over a decade is disturbing, but it doesn't really change anything. Use credit cards - not debit cards - for online transactions (in the US, consumer protections are stronger for credit cards, and resolving a breach is less disruptive). If you stop doing business with a company, ask it to delete any payment information it holds for you. And keep an eye on payment accounts for unexpected transactions. While you can't always prevent a breach, you can minimize the inconvenience by catching it quickly and canceling the affected account.

---

After writing this, but before publishing, I attended the B-Sides Austin security conference, where security analyst Wendy Nather gave a talk on "Ten crazy ideas for fixing security." One of the crazy ideas was that data should have an expiration date. That led to a Twitter conversation with author and long-time security manager Davi Ottenheimer, and ultimately to a group of researchers who wrote a paper on an innovative way to address this type of problem.

The basic premise behind "Vanish" is that data has a built-in expiration date. This is done by encrypting the data, and breaking the decryption key into pieces that are stored in a way that degrades over time. Before the prescribed expiration date, the key can be re-assembled and used to decrypt the original data; after the expiration period though, the key is degraded to the point that it can no longer be used to decrypt the original data, leaving the data unrecoverable.
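The key-splitting idea can be illustrated with Shamir's secret sharing, the threshold scheme Vanish builds on: the key is split into n shares, any k of which reconstruct it, while fewer than k reveal nothing about it. As the Vanish paper describes it, the shares are scattered across a peer-to-peer distributed hash table whose nodes discard old entries, so shares quietly disappear over time. The sketch below models that decay by simply dropping shares; the prime, the parameters (n=10, k=7) and the function names are illustrative assumptions, the step of encrypting the data with the key is omitted, and this is not the Vanish implementation.

```python
import secrets

# Mersenne prime 2**521 - 1; comfortably larger than a 128- or 256-bit key.
PRIME = 2**521 - 1


def split_secret(secret: int, n: int, k: int) -> list[tuple[int, int]]:
    """Split secret into n shares such that any k of them recover it."""
    if not (0 <= secret < PRIME and 1 <= k <= n):
        raise ValueError("need 0 <= secret < PRIME and 1 <= k <= n")
    # Random polynomial of degree k - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(k - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # evaluate the polynomial with Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 from distinct shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * -xj % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    key = secrets.randbits(128)  # stand-in for the key that encrypts the data
    shares = split_secret(key, n=10, k=7)

    # Before expiry: seven shares are still reachable, so the key comes back.
    print(recover_secret(shares[:7]) == key)  # True

    # After "decay": only six shares survive, below the threshold of seven,
    # and interpolation yields an unrelated number instead of the key.
    print(recover_secret(shares[:6]) == key)  # False (with overwhelming probability)
```

The threshold is what makes the expiration work: the data stays readable as long as enough shares survive, and becomes unrecoverable once natural churn pushes the count below k, with no one having to remember to delete anything.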

A system such as this looks quite appealing in light of the breaches at Anthem and Premera. Stolen credit cards are easy to replace, but stolen identities are not. Birthdates don't change, and social security numbers don't expire. Storing data in a way that the data has a finite shelf life could go a long way toward limiting the damage caused by a breach.